The goals / steps of this project are the following:

- Perform a Histogram of Oriented Gradients (HOG) feature extraction on a labeled training set of images and train a Linear SVM classifier.

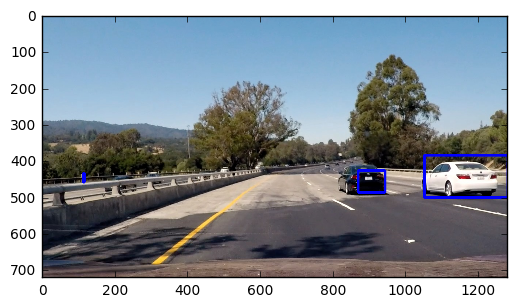

- Implement a sliding-window technique and use the trained classifier to search for vehicles in images.

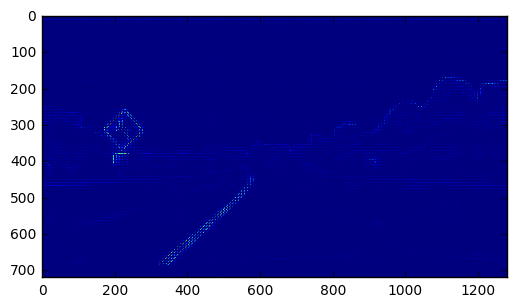

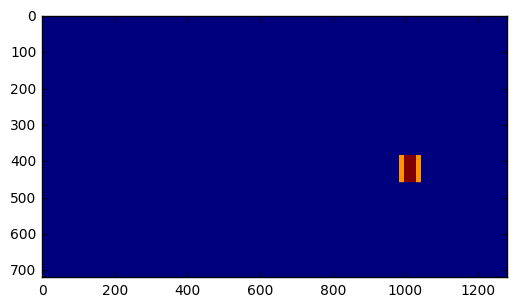

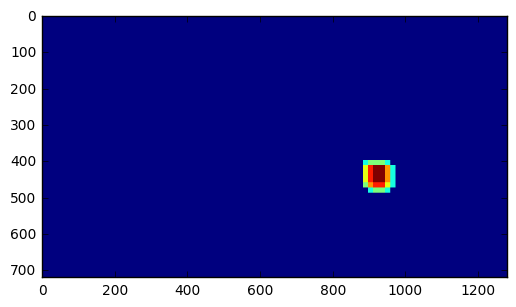

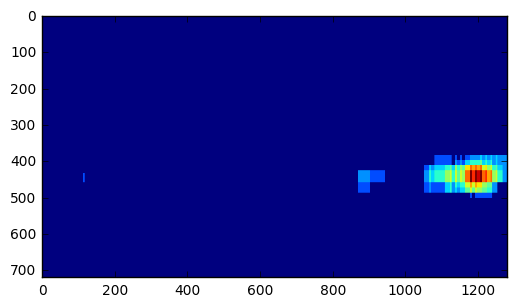

- Run the pipeline on a video stream and create a heat map of recurring detections frame by frame to reject outliers and follow detected vehicles.

- Estimate a bounding box for vehicles detected.

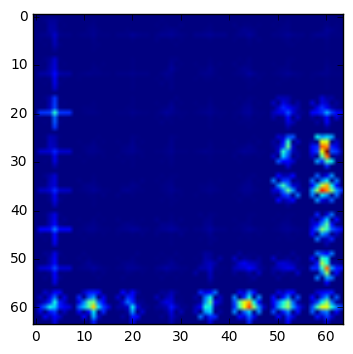

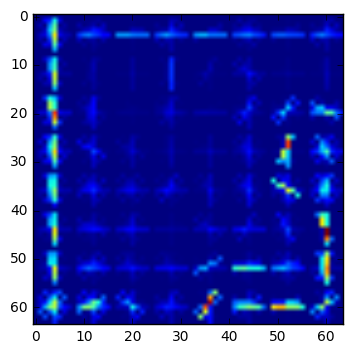

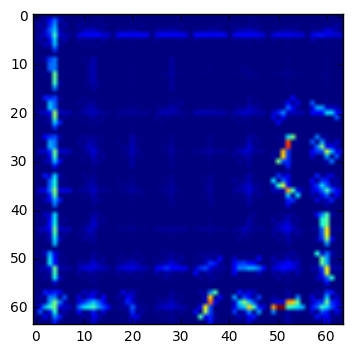

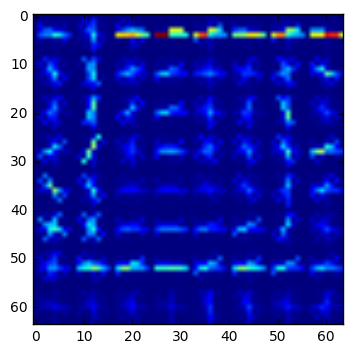

Explanation of how I extracted HOG features from the training images.

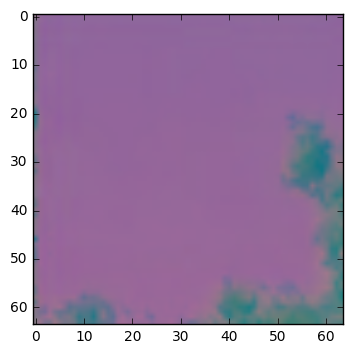

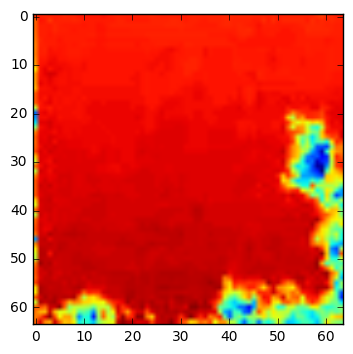

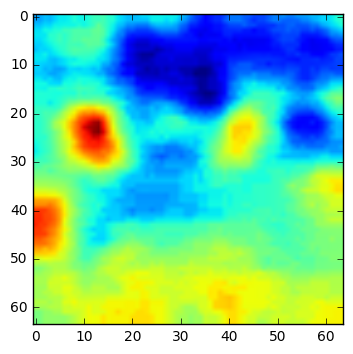

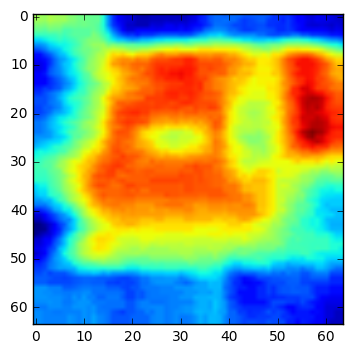

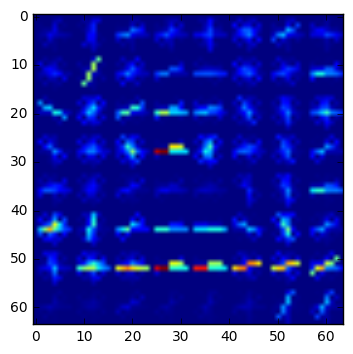

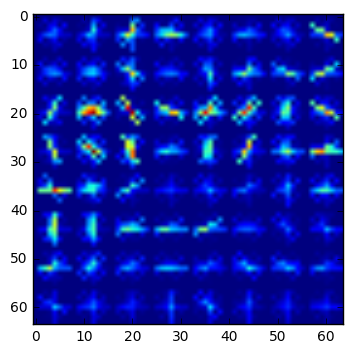

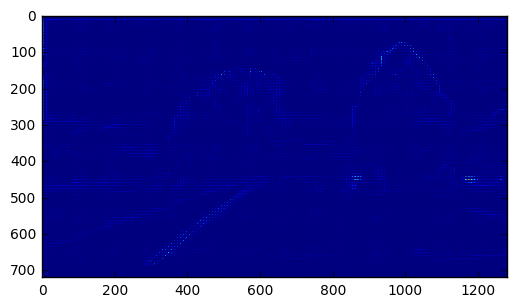

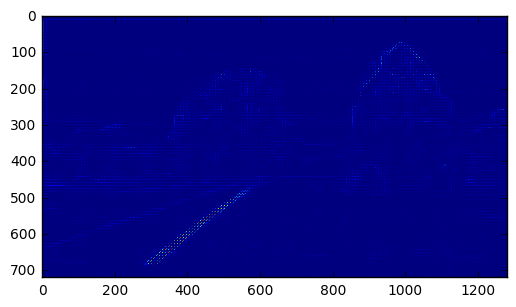

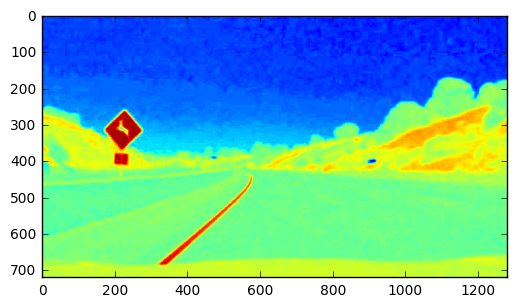

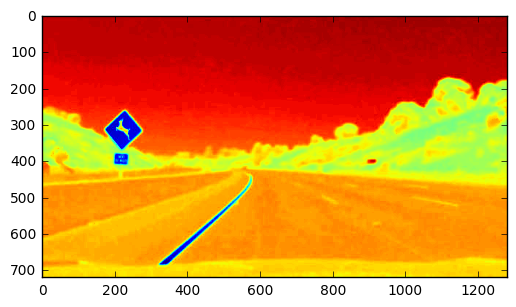

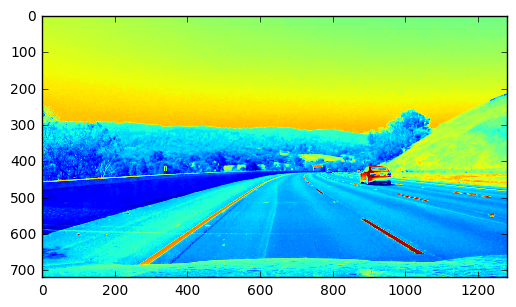

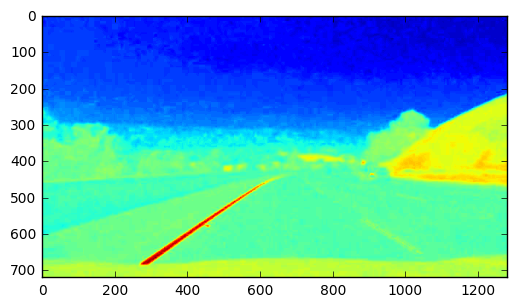

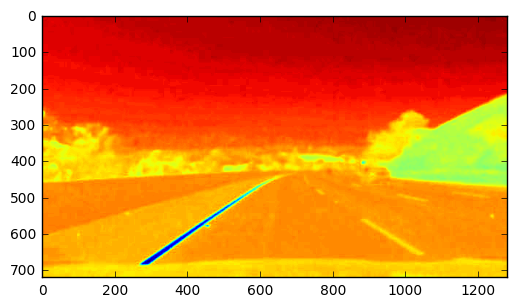

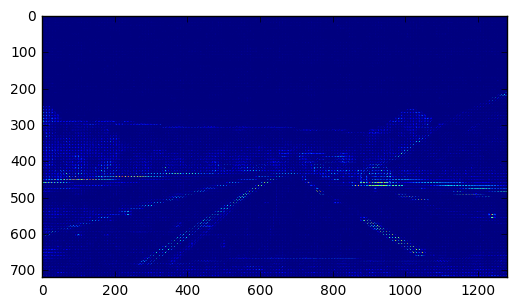

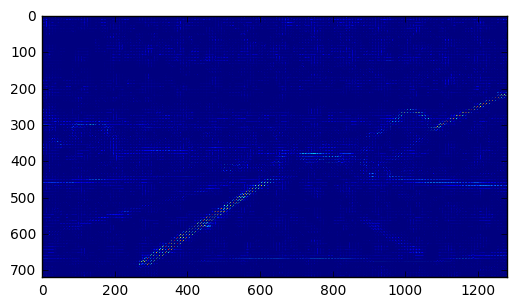

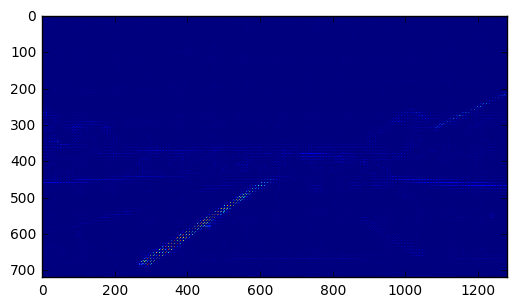

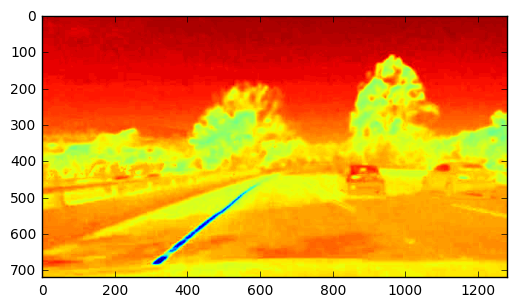

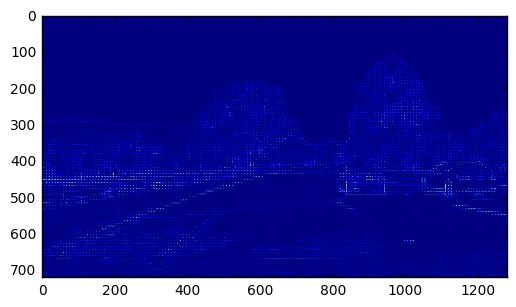

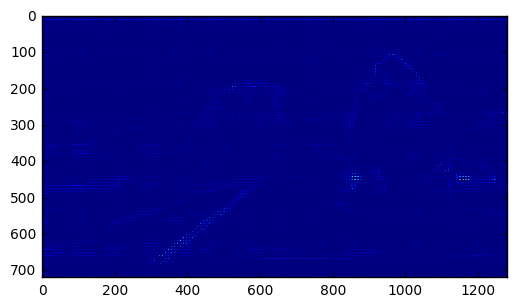

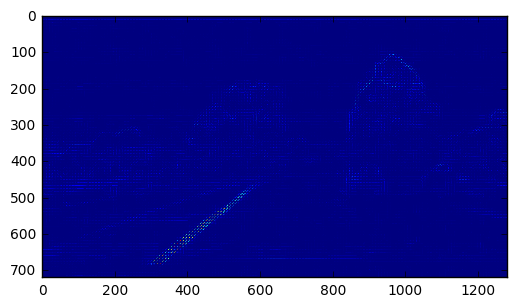

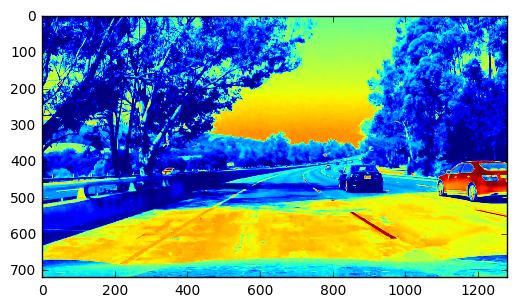

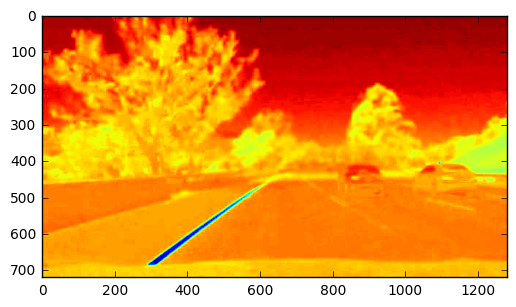

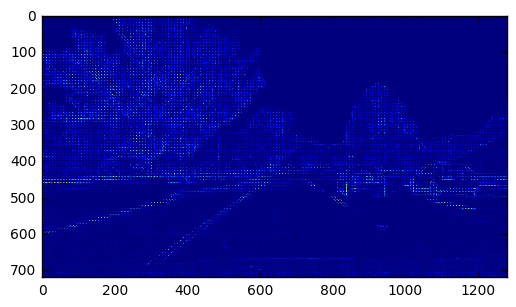

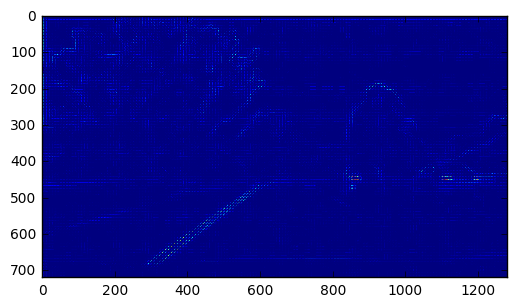

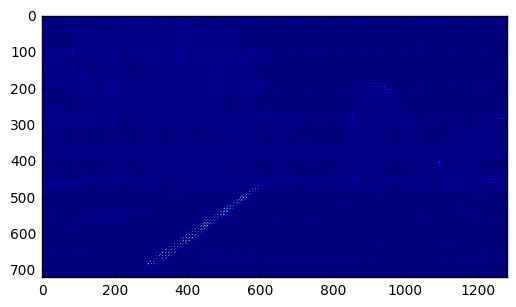

Since the training images are in .png format, I loaded them with matplotlib.image (`mpimg.imread`), which returns pixel values as floats in the range [0, 1]. The video frames and .jpg test images are uint8 values in [0, 255], so `detect_vehicles()` divides them by 255 to put every image on the same scale before feature extraction; the feature vectors themselves are still standardized with `StandardScaler` before training. After reading in each image, I converted it to the YCrCb color space, since it produced the best results, and then extracted the HOG features with the function `get_hog_features()`. The figures in this section show examples of HOG feature extraction from both vehicle and non-vehicle images.
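Because .png and .jpg files load on different scales, it helps to bring every image to one range before extracting features. The sketch below is a minimal illustration, assuming uint8 input is on a 0-255 scale and float input is already on a 0-1 scale; the helper name `to_unit_float` is hypothetical and not part of the notebook, which simply divides each frame by 255 inside `detect_vehicles()`.

```python
import numpy as np


def to_unit_float(img):
    """Return a float32 copy of img with pixel values in [0, 1].

    Assumption: integer images (e.g. .jpg frames or video frames) use a
    0-255 scale, while float images (e.g. .png files read with
    matplotlib.image.imread) are already on a 0-1 scale.
    """
    if np.issubdtype(img.dtype, np.integer):
        return img.astype(np.float32) / 255.0
    return img.astype(np.float32)


# A white .jpg-style frame and a white .png-style patch end up on the same scale
jpg_like = np.full((2, 2, 3), 255, dtype=np.uint8)
png_like = np.ones((2, 2, 3), dtype=np.float32)
print(np.allclose(to_unit_float(jpg_like), to_unit_float(png_like)))  # True
```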

Explanation of how I settled on my final choice of HOG parameters.

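I used all 3 YCrCb channels with 18 orientations, 8 pixels per cell and 2 cells per block, and turned off the spatial-binning and color-histogram features (`spatial_feat` and `hist_feat` are `False`), so the feature vector consists of HOG features only. This configuration gave the best balance between accuracy and speed.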
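To see what these parameters cost, the length of the HOG vector can be worked out by hand. This sketch assumes 64 x 64 training patches (the search code resizes every window to 64 x 64, so the training patches must match) and the one-cell block stride used by `skimage.feature.hog`; `hog_feature_length` is a hypothetical helper for illustration only.

```python
def hog_feature_length(img_size=64, orient=18, pix_per_cell=8,
                       cell_per_block=2, n_channels=3):
    """Length of a flattened HOG vector for a square image, assuming
    blocks slide one cell at a time (as in skimage.feature.hog)."""
    cells = img_size // pix_per_cell              # cells per side: 64 // 8 = 8
    blocks = cells - cell_per_block + 1           # block positions per side: 8 - 2 + 1 = 7
    per_block = cell_per_block * cell_per_block * orient  # 2 * 2 * 18 = 72 values
    return blocks * blocks * per_block * n_channels


print(hog_feature_length())          # 10584  (7 * 7 * 72 * 3)
print(hog_feature_length(orient=9))  # 5292
```

Doubling the orientations from 9 to 18 doubles the feature vector, which is one reason the orientation count trades directly against classification speed.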

Description of how I trained a classifier using my selected HOG features.

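First, the features of each image in the training set were concatenated into a single one-dimensional feature vector, and the vectors were standardized to zero mean and unit variance with `StandardScaler`. The dataset was then split into training and test sets, with 20% of the data held out for testing. Finally, a linear SVM classifier (scikit-learn's `LinearSVC`) was trained on the training set.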
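The scaling and splitting steps can be sketched with NumPy alone. This is a hedged illustration, not the notebook's code: `scale_and_split` is a hypothetical helper, the feature vectors are random stand-ins, and, unlike the notebook (which fits `StandardScaler` on all rows before splitting), it estimates the mean and standard deviation on the training rows only so that no test-set statistics leak into training.

```python
import numpy as np


def scale_and_split(X, y, test_size=0.2, seed=0):
    """Shuffle, hold out test_size of the rows, and standardize the features
    using statistics computed on the training rows only."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    n_test = int(round(len(X) * test_size))
    test_idx, train_idx = idx[:n_test], idx[n_test:]
    mean = X[train_idx].mean(axis=0)
    std = X[train_idx].std(axis=0)
    std[std == 0] = 1.0  # leave constant features unscaled instead of dividing by zero
    X_train = (X[train_idx] - mean) / std
    X_test = (X[test_idx] - mean) / std
    return X_train, X_test, y[train_idx], y[test_idx]


# Toy stand-ins for the vehicle / non-vehicle feature vectors
rng = np.random.default_rng(42)
car_features = rng.random((60, 10))
notcar_features = rng.random((40, 10))
X = np.vstack((car_features, notcar_features)).astype(np.float64)
# Labels: 1 for vehicles, 0 for non-vehicles
y = np.hstack((np.ones(len(car_features)), np.zeros(len(notcar_features))))
X_train, X_test, y_train, y_test = scale_and_split(X, y)
print(X_train.shape, X_test.shape)  # (80, 10) (20, 10)
```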

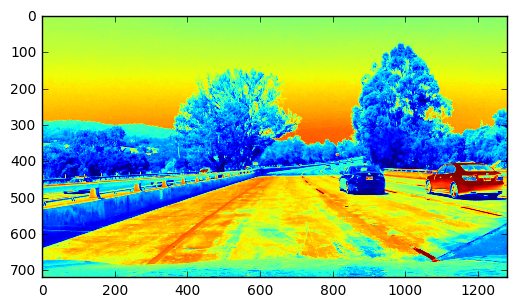

Description of how I implemented a sliding window search and how I decided what scales to search and how much to overlap window.

The sliding window technique was used to detect vehicles. Each frame is passed into the function `gethotwindows()`, which generates the search windows and classifies each one. Since the upper half of each image mostly contains the sky, only the lower half is searched, using two window sizes with 80% overlap: 75 x 75 windows in a narrow band just below the middle of the image (rows h/2 + 25 to h/2 + 100, where h is the image height), where vehicles appear smaller, and 100 x 100 windows from row h/2 + 75 down to the bottom of the image. The function also receives the labels from the previous frame, with the aim of narrowing the range searched along the x axis, but the current code does not use them yet, so every frame is searched across its full width. Each window is resized to 64 x 64, its HOG features are extracted and scaled, and the classifier determines whether a vehicle is present or not.
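The window generation described above can be sketched as follows. This is a variant, not the notebook's `slide_window()`: the hypothetical `slide_window_in_bounds` keeps every window inside its search band and rounds the step instead of truncating it (with truncation, `75 * (1 - 0.8)` is slightly below 15 in floating point and becomes 14). Because windows must fit inside the band, the narrow 75-pixel band yields a single row here, whereas the notebook's version lets windows extend past the band and image edges. The 1280 x 720 frame size is an assumption.

```python
def slide_window_in_bounds(x_range, y_range, window, overlap):
    """List ((x1, y1), (x2, y2)) square windows that fit entirely inside the
    region x_range[0] <= x < x_range[1], y_range[0] <= y < y_range[1]."""
    # Round (rather than truncate) so 75 * (1 - 0.8) gives a 15-pixel step
    step = max(1, int(round(window * (1 - overlap))))
    windows = []
    for starty in range(y_range[0], y_range[1] - window + 1, step):
        for startx in range(x_range[0], x_range[1] - window + 1, step):
            windows.append(((startx, starty), (startx + window, starty + window)))
    return windows


# Bands for an assumed 1280 x 720 frame (h/2 = 360)
far = slide_window_in_bounds((0, 1280), (385, 460), window=75, overlap=0.8)
near = slide_window_in_bounds((0, 1280), (435, 720), window=100, overlap=0.8)
print(len(far), len(near))  # 81 600
```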

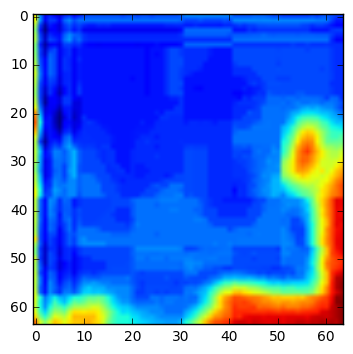

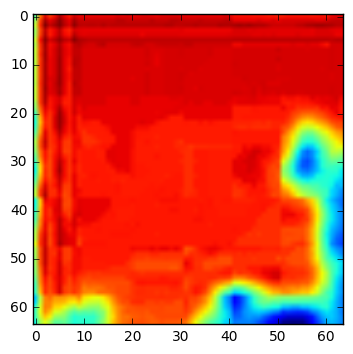

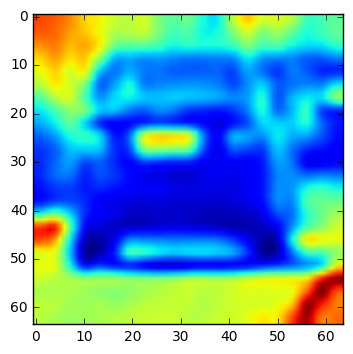

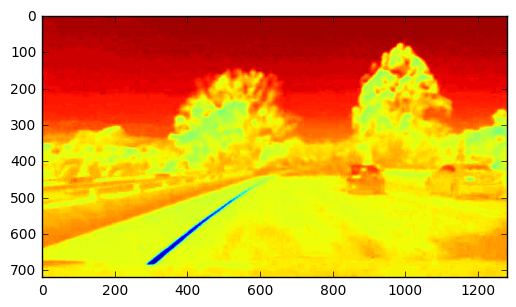

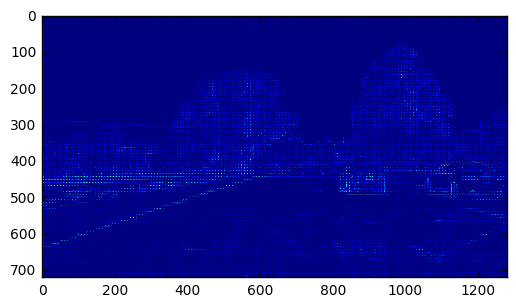

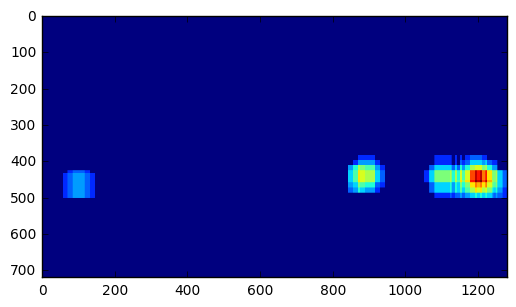

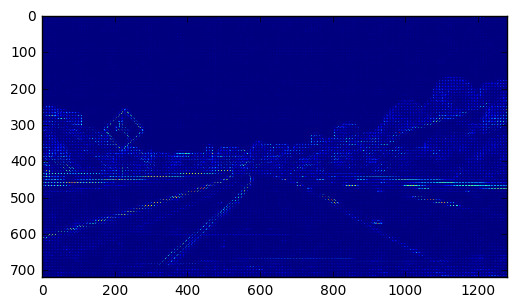

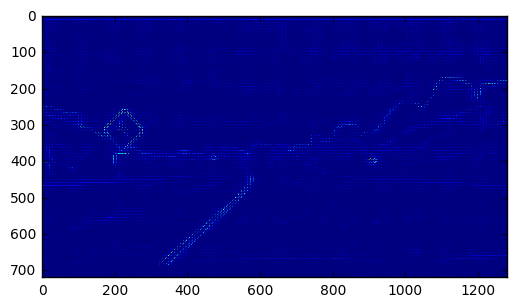

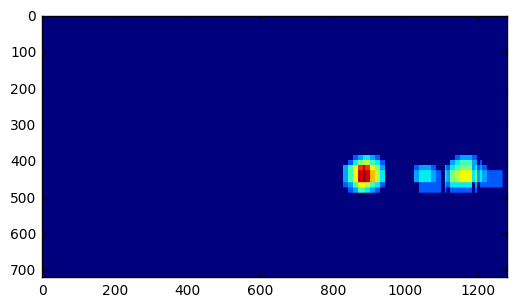

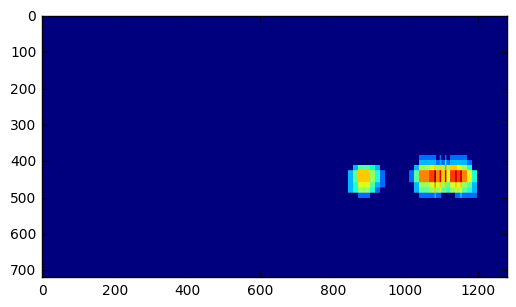

Description of how I implemented some kind of filter for false positives and some method for combining overlapping bounding boxes.

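First, a heatmap of the positive detections is created with the function `add_heat()`, which adds 1 to every pixel inside each detected window. A rolling list of the last 10 heatmaps is kept and updated every frame, and the output heatmap is the average of those 10 heatmaps. Averaging reduces false positives: a detection that appears in one frame but not in the others gets averaged out. The averaged heatmap is then thresholded so that only pixels with an average heat greater than 2 (more than two overlapping detections per frame, on average) are kept. Finally, scipy's `label()` groups the remaining pixels into connected regions, and `draw_labeled_bboxes()` draws one bounding box around each region, which combines overlapping detections into a single box.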
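The rolling average and threshold can be sketched compactly with a fixed-length deque. This is a minimal illustration, assuming boxes of the form `((x1, y1), (x2, y2))`; `RollingHeat` is a hypothetical class, not the notebook's `ImageHolder`. One difference: the notebook pre-fills its history with copies of the first heatmap, while this sketch averages over however many frames it has seen so far.

```python
from collections import deque

import numpy as np


class RollingHeat:
    """Average the heatmaps of the last n frames and threshold the result."""

    def __init__(self, shape, n=10, threshold=2):
        self.frames = deque(maxlen=n)  # the oldest heatmap drops out automatically
        self.shape = shape
        self.threshold = threshold

    def update(self, boxes):
        heat = np.zeros(self.shape, dtype=float)
        for (x1, y1), (x2, y2) in boxes:
            heat[y1:y2, x1:x2] += 1    # same rule as add_heat()
        self.frames.append(heat)
        avg = np.mean(list(self.frames), axis=0)
        avg[avg <= self.threshold] = 0  # same rule as apply_threshold()
        return avg


rh = RollingHeat((10, 10))
car = [((2, 2), (6, 6))] * 3           # three overlapping windows every frame
for _ in range(9):
    out = rh.update(car)
# In the 10th frame, five windows fire once on a spurious spot
out = rh.update(car + [((7, 7), (9, 9))] * 5)
print(out[3, 3], out[8, 8])  # 3.0 0.0
```

The persistent car keeps an average heat of 3 and survives, while the one-frame spike averages to 0.5 and is removed.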

Brief discussion of the problems / issues I faced in my implementation of this project. Where will the pipeline likely fail? What could I do to make it more robust?

Although it works reasonably well, the vehicle detection pipeline is still not perfect. Sometimes it takes several seconds to detect a car that is present in the images. Other times, it mistakenly merges 2 vehicles into 1 detection when they are close to each other. Producing the output video is also very slow (about 2 hours 40 minutes for the 1,261-frame project video, roughly 8 seconds per frame), which makes the pipeline impractical for real-time applications. To make it more robust, a stronger classifier such as a convolutional neural network could be used.
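The merging of nearby vehicles follows from how the boxes are formed: every connected region of the thresholded heatmap gets one label, so if the heat of two cars touches, `draw_labeled_bboxes()` draws a single box around both. The sketch below illustrates this with `count_blobs`, a hypothetical pure-Python stand-in for `scipy.ndimage.label` using its default 4-connectivity; `heat_from` and the box coordinates are also made up for illustration.

```python
from collections import deque

import numpy as np


def count_blobs(mask):
    """Count 4-connected regions of True pixels (breadth-first flood fill)."""
    seen = np.zeros_like(mask, dtype=bool)
    h, w = mask.shape
    blobs = 0
    for y in range(h):
        for x in range(w):
            if mask[y, x] and not seen[y, x]:
                blobs += 1
                seen[y, x] = True
                queue = deque([(y, x)])
                while queue:
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                            seen[ny, nx] = True
                            queue.append((ny, nx))
    return blobs


def heat_from(boxes, shape=(20, 40)):
    """Build a heatmap by adding 1 inside each ((x1, y1), (x2, y2)) box."""
    heat = np.zeros(shape)
    for (x1, y1), (x2, y2) in boxes:
        heat[y1:y2, x1:x2] += 1
    return heat


apart = [((2, 5), (12, 15)), ((20, 5), (30, 15))]     # two cars with a gap between them
touching = [((2, 5), (16, 15)), ((15, 5), (30, 15))]  # detections overlap by one column
print(count_blobs(heat_from(apart) > 0))     # 2
print(count_blobs(heat_from(touching) > 0))  # 1
```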

Link to my final video output.

Here's the link to my video result

```python
# Load libraries
import glob
import pickle
import time

import cv2
import joblib  # sklearn.externals.joblib was removed from scikit-learn; use the standalone package
import matplotlib.image as mpimg
import matplotlib.pyplot as plt
import numpy as np
from scipy.ndimage import label  # scipy.ndimage.measurements is deprecated
from skimage.feature import hog
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from sklearn.svm import LinearSVC

%matplotlib inline
```

```python
# Define hyperparameters
color_space = 'YCrCb'  # Can be RGB, HSV, LUV, HLS, YUV, YCrCb
orient = 18  # HOG orientations
pix_per_cell = 8  # HOG pixels per cell
cell_per_block = 2  # HOG cells per block
hog_channel = "ALL"  # Can be 0, 1, 2, or "ALL"
spatial_size = (64, 64)  # Spatial binning dimensions
hist_bins = 64  # Number of histogram bins
spatial_feat = False  # Spatial features on or off
hist_feat = False  # Histogram features on or off
hog_feat = True  # HOG features on or off
```

```python
# Define a function to return HOG features and, optionally, a visualization.
# Note: scikit-image renamed the keyword `visualise` to `visualize`.
def get_hog_features(img, orient, pix_per_cell, cell_per_block,
                     vis=False, feature_vec=True):
    # Call with two outputs if vis is True
    if vis:
        features, hog_image = hog(img, orientations=orient,
                                  pixels_per_cell=(pix_per_cell, pix_per_cell),
                                  cells_per_block=(cell_per_block, cell_per_block),
                                  transform_sqrt=True,
                                  visualize=True, feature_vector=feature_vec)
        return features, hog_image
    # Otherwise call with one output
    features = hog(img, orientations=orient,
                   pixels_per_cell=(pix_per_cell, pix_per_cell),
                   cells_per_block=(cell_per_block, cell_per_block),
                   transform_sqrt=True,
                   visualize=False, feature_vector=feature_vec)
    return features
```

```python
# Define a function to compute binned color features
def bin_spatial(img, size=(32, 32)):
    # Use cv2.resize().ravel() to create the feature vector
    features = cv2.resize(img, size).ravel()
    # Return the feature vector
    return features

# Define a function to compute color histogram features.
# bins_range must match the pixel scale: (0, 1) for the float images used here
# (.png files read with mpimg, frames divided by 255), (0, 256) for uint8 images.
def color_hist(img, nbins=32, bins_range=(0, 1)):
    # Compute the histogram of the color channels separately
    channel1_hist = np.histogram(img[:, :, 0], bins=nbins, range=bins_range)
    channel2_hist = np.histogram(img[:, :, 1], bins=nbins, range=bins_range)
    channel3_hist = np.histogram(img[:, :, 2], bins=nbins, range=bins_range)
    # Concatenate the bin counts into a single feature vector
    hist_features = np.concatenate((channel1_hist[0], channel2_hist[0], channel3_hist[0]))
    # Return the feature vector
    return hist_features
```

```python
# Define a function to extract features from a list of image files
# Have this function call bin_spatial(), color_hist() and get_hog_features()
def extract_features(imgs, color_space='RGB', spatial_size=(32, 32),
                     hist_bins=32, orient=9,
                     pix_per_cell=8, cell_per_block=2, hog_channel=0,
                     spatial_feat=True, hist_feat=True, hog_feat=True):
    # Create a list to append feature vectors to
    features = []
    # Iterate through the list of images
    for file in imgs:
        file_features = []
        # Read in each one by one (.png files load as floats in [0, 1])
        image = mpimg.imread(file)
        # Apply color conversion if other than 'RGB'
        if color_space != 'RGB':
            if color_space == 'HSV':
                feature_image = cv2.cvtColor(image, cv2.COLOR_RGB2HSV)
            elif color_space == 'LUV':
                feature_image = cv2.cvtColor(image, cv2.COLOR_RGB2LUV)
            elif color_space == 'HLS':
                feature_image = cv2.cvtColor(image, cv2.COLOR_RGB2HLS)
            elif color_space == 'YUV':
                feature_image = cv2.cvtColor(image, cv2.COLOR_RGB2YUV)
            elif color_space == 'YCrCb':
                feature_image = cv2.cvtColor(image, cv2.COLOR_RGB2YCrCb)
        else:
            feature_image = np.copy(image)
        if spatial_feat:
            spatial_features = bin_spatial(feature_image, size=spatial_size)
            file_features.append(spatial_features)
        if hist_feat:
            # Apply color_hist()
            hist_features = color_hist(feature_image, nbins=hist_bins)
            file_features.append(hist_features)
        if hog_feat:
            # Call get_hog_features() with vis=False, feature_vec=True
            if hog_channel == 'ALL':
                hog_features = []
                for channel in range(feature_image.shape[2]):
                    hog_features.append(get_hog_features(feature_image[:, :, channel],
                                                         orient, pix_per_cell, cell_per_block,
                                                         vis=False, feature_vec=True))
                hog_features = np.ravel(hog_features)
            else:
                hog_features = get_hog_features(feature_image[:, :, hog_channel], orient,
                                                pix_per_cell, cell_per_block,
                                                vis=False, feature_vec=True)
            # Append the new feature vector to the features list
            file_features.append(hog_features)
        features.append(np.concatenate(file_features))
    # Return list of feature vectors
    return features
```

```python
# Define a function that takes an image,
# start and stop positions in both x and y,
# window size (x and y dimensions),
# and overlap fraction (for both x and y)
def slide_window(img, x_start_stop=(None, None), y_start_stop=(None, None),
                 xy_window=(64, 64), xy_overlap=(0.5, 0.5), window_list=None):
    # Copy the start/stop pairs so neither the caller's lists nor the defaults are modified
    x_start_stop = list(x_start_stop)
    y_start_stop = list(y_start_stop)
    # If x and/or y start/stop positions not defined, set to image size
    if x_start_stop[0] is None:
        x_start_stop[0] = 0
    if x_start_stop[1] is None:
        x_start_stop[1] = img.shape[1]
    if y_start_stop[0] is None:
        y_start_stop[0] = 0
    if y_start_stop[1] is None:
        y_start_stop[1] = img.shape[0]
    # Compute the span of the region to be searched
    xspan = x_start_stop[1] - x_start_stop[0]
    yspan = y_start_stop[1] - y_start_stop[0]
    # Compute the number of pixels per step in x/y (np.int was removed from NumPy)
    nx_pix_per_step = int(xy_window[0] * (1 - xy_overlap[0]))
    ny_pix_per_step = int(xy_window[1] * (1 - xy_overlap[1]))
    # Compute the number of windows in x/y
    nx_windows = int(xspan / nx_pix_per_step) - 1
    ny_windows = int(yspan / ny_pix_per_step) - 1
    # Initialize a list to append window positions to
    if window_list is None:
        window_list = []
    # Loop through finding x and y window positions
    # Note: you could vectorize this step, but in practice
    # you'll be considering windows one by one with your
    # classifier, so looping makes sense
    for ys in range(ny_windows):
        for xs in range(nx_windows):
            # Calculate window position
            startx = xs * nx_pix_per_step + x_start_stop[0]
            endx = startx + xy_window[0]
            starty = ys * ny_pix_per_step + y_start_stop[0]
            endy = starty + xy_window[1]
            # Append window position to list
            window_list.append(((startx, starty), (endx, endy)))
    # Return the list of windows
    return window_list
```

```python
# Define a function to draw bounding boxes
def draw_boxes(img, bboxes, color=(0, 0, 255), thick=6):
    # Make a copy of the image
    imcopy = np.copy(img)
    # Iterate through the bounding boxes
    for bbox in bboxes:
        # Draw a rectangle given bbox coordinates
        cv2.rectangle(imcopy, bbox[0], bbox[1], color, thick)
    # Return the image copy with boxes drawn
    return imcopy
```

```python
# Define a function to extract features from a single image window
# This function is very similar to extract_features()
# just for a single image rather than list of images
def single_img_features(img, color_space='RGB', spatial_size=(32, 32),
                        hist_bins=32, orient=9,
                        pix_per_cell=8, cell_per_block=2, hog_channel=0,
                        spatial_feat=True, hist_feat=True, hog_feat=True):
    # 1) Define an empty list to receive features
    img_features = []
    # 2) Apply color conversion if other than 'RGB'
    if color_space != 'RGB':
        if color_space == 'HSV':
            feature_image = cv2.cvtColor(img, cv2.COLOR_RGB2HSV)
        elif color_space == 'LUV':
            feature_image = cv2.cvtColor(img, cv2.COLOR_RGB2LUV)
        elif color_space == 'HLS':
            feature_image = cv2.cvtColor(img, cv2.COLOR_RGB2HLS)
        elif color_space == 'YUV':
            feature_image = cv2.cvtColor(img, cv2.COLOR_RGB2YUV)
        elif color_space == 'YCrCb':
            feature_image = cv2.cvtColor(img, cv2.COLOR_RGB2YCrCb)
    else:
        feature_image = np.copy(img)
    # 3) Compute spatial features if flag is set
    if spatial_feat:
        spatial_features = bin_spatial(feature_image, size=spatial_size)
        # 4) Append features to list
        img_features.append(spatial_features)
    # 5) Compute histogram features if flag is set
    if hist_feat:
        hist_features = color_hist(feature_image, nbins=hist_bins)
        # 6) Append features to list
        img_features.append(hist_features)
    # 7) Compute HOG features if flag is set
    if hog_feat:
        if hog_channel == 'ALL':
            hog_features = []
            for channel in range(feature_image.shape[2]):
                hog_features.extend(get_hog_features(feature_image[:, :, channel],
                                                     orient, pix_per_cell, cell_per_block,
                                                     vis=False, feature_vec=True))
        else:
            hog_features = get_hog_features(feature_image[:, :, hog_channel], orient,
                                            pix_per_cell, cell_per_block,
                                            vis=False, feature_vec=True)
        # 8) Append features to list
        img_features.append(hog_features)
    # 9) Return concatenated array of features
    return np.concatenate(img_features)
```

```python
# Define a function you will pass an image
# and the list of windows to be searched (output of slide_window())
def search_windows(img, windows, clf, scaler, color_space='RGB',
                   spatial_size=(32, 32), hist_bins=32,
                   hist_range=(0, 256), orient=9,
                   pix_per_cell=8, cell_per_block=2,
                   hog_channel=0, spatial_feat=True,
                   hist_feat=True, hog_feat=True):
    # 1) Create an empty list to receive positive detection windows
    on_windows = []
    # 2) Iterate over all windows in the list
    for window in windows:
        # 3) Extract the test window from original image and resize it to the training size
        test_img = cv2.resize(img[window[0][1]:window[1][1], window[0][0]:window[1][0]], (64, 64))
        # 4) Extract features for that window using single_img_features()
        features = single_img_features(test_img, color_space=color_space,
                                       spatial_size=spatial_size, hist_bins=hist_bins,
                                       orient=orient, pix_per_cell=pix_per_cell,
                                       cell_per_block=cell_per_block,
                                       hog_channel=hog_channel, spatial_feat=spatial_feat,
                                       hist_feat=hist_feat, hog_feat=hog_feat)
        # 5) Scale extracted features to be fed to classifier
        test_features = scaler.transform(np.array(features).reshape(1, -1))
        # 6) Predict using your classifier
        prediction = clf.predict(test_features)
        # 7) If positive (prediction == 1) then save the window
        if prediction == 1:
            on_windows.append(window)
    # 8) Return windows for positive detections
    return on_windows
```

```python
# Train model
def train():
    # Collect the training image paths (one sub-folder per data source)
    vehicles = glob.glob("vehicles/*/*.png")
    not_vehicles = glob.glob("non-vehicles/*/*.png")
    vehicles_features = extract_features(vehicles, color_space=color_space,
                                         spatial_size=spatial_size, hist_bins=hist_bins,
                                         orient=orient, pix_per_cell=pix_per_cell,
                                         cell_per_block=cell_per_block,
                                         hog_channel=hog_channel, spatial_feat=spatial_feat,
                                         hist_feat=hist_feat, hog_feat=hog_feat)
    not_vehicles_features = extract_features(not_vehicles, color_space=color_space,
                                             spatial_size=spatial_size, hist_bins=hist_bins,
                                             orient=orient, pix_per_cell=pix_per_cell,
                                             cell_per_block=cell_per_block,
                                             hog_channel=hog_channel, spatial_feat=spatial_feat,
                                             hist_feat=hist_feat, hog_feat=hog_feat)
    # Stack the feature vectors and standardize each feature column
    X = np.vstack((vehicles_features, not_vehicles_features)).astype(np.float64)
    X_scaler = StandardScaler().fit(X)
    scaled_X = X_scaler.transform(X)
    # Labels: 1 for vehicles, 0 for non-vehicles
    y = np.hstack((np.ones(len(vehicles_features)), np.zeros(len(not_vehicles_features))))
    # Hold out 20% of the data for testing
    rand_state = np.random.randint(0, 100)
    X_train, X_test, y_train, y_test = train_test_split(
        scaled_X, y, test_size=0.2, random_state=rand_state)
    svm = LinearSVC()
    svm.fit(X_train, y_train)
    # Report accuracy on the held-out test set
    print('Test accuracy:', round(svm.score(X_test, y_test), 4))
    return X_scaler, svm

X_scaler, svm = train()
```

```python
def add_heat(heatmap, bbox_list):
    # Iterate through list of bboxes
    for box in bbox_list:
        # Add += 1 for all pixels inside each bbox
        # Assuming each "box" takes the form ((x1, y1), (x2, y2))
        heatmap[box[0][1]:box[1][1], box[0][0]:box[1][0]] += 1
    # Return updated heatmap
    return heatmap

def apply_threshold(heatmap, threshold):
    # Zero out pixels at or below the threshold
    heatmap[heatmap <= threshold] = 0
    # Return thresholded map
    return heatmap

def draw_labeled_bboxes(img, labels):
    # labels is the (label_array, num_features) tuple returned by scipy.ndimage.label
    for car_number in range(1, labels[1] + 1):
        # Find pixels with each car_number label value
        nonzero = (labels[0] == car_number).nonzero()
        # Identify x and y values of those pixels
        nonzeroy = np.array(nonzero[0])
        nonzerox = np.array(nonzero[1])
        # Define a bounding box based on min/max x and y
        # (cast to plain Python ints for cv2.rectangle)
        bbox = ((int(np.min(nonzerox)), int(np.min(nonzeroy))),
                (int(np.max(nonzerox)), int(np.max(nonzeroy))))
        # Draw the box on the image
        cv2.rectangle(img, bbox[0], bbox[1], (0, 0, 255), 6)
    # Return the image
    return img
```

```python
def gethotwindows(image, previous=None, count=0):
    # NOTE: `previous` (the last frame's labels) and `count` are accepted but not used yet;
    # every frame is searched across the full image width.
    half = image.shape[0] // 2
    x_start_stop = [None, None]
    # Smaller 75x75 windows in a narrow band just below the middle of the image
    windows = slide_window(image, x_start_stop=x_start_stop,
                           y_start_stop=[half + 25, half + 100],
                           xy_window=(75, 75), xy_overlap=(0.8, 0.8), window_list=None)
    # Larger 100x100 windows from just below the middle to the bottom, appended to the same list
    windows = slide_window(image, x_start_stop=x_start_stop,
                           y_start_stop=[half + 75, image.shape[0]],
                           xy_window=(100, 100), xy_overlap=(0.8, 0.8), window_list=windows)
    # Classify every window and keep the positive ones
    hot_windows = search_windows(image, windows, svm, X_scaler, color_space=color_space,
                                 spatial_size=spatial_size, hist_bins=hist_bins,
                                 orient=orient, pix_per_cell=pix_per_cell,
                                 cell_per_block=cell_per_block,
                                 hog_channel=hog_channel, spatial_feat=spatial_feat,
                                 hist_feat=hist_feat, hog_feat=hog_feat)
    return hot_windows
```

```python
def detect_vehicles(image, showheatmap=False, holder=None):
    image_copy = np.copy(image)
    # Video frames and .jpg test images are uint8 in [0, 255];
    # scale them to [0, 1] to match the .png training images
    image = image.astype(np.float32) / 255
    count = 0
    previousLabels = None
    if holder is not None:
        previousLabels = holder.labels
        count = holder.iteration
    hot_windows = gethotwindows(image, previous=previousLabels, count=count)
    # Heatmap for this frame: +1 for every pixel inside each positive window
    heat = np.zeros_like(image[:, :, 0], dtype=float)
    heatmap = add_heat(heatmap=heat, bbox_list=hot_windows)
    if holder is None:
        holder = ImageHolder()
    # On the first frame, fill the history with copies of the current heatmap
    if len(holder.previousHeat) < holder.averageCount:
        for _ in range(holder.averageCount):
            holder.previousHeat.append(np.copy(heatmap))
    # Overwrite the oldest entry (the history is used as a ring buffer)
    holder.previousHeat[holder.iteration % holder.averageCount] = heatmap
    # Average the stored heatmaps, then zero out pixels whose average heat is <= 2
    averageHeatMap = np.sum(holder.previousHeat, axis=0) / holder.averageCount
    averageHeatMap = apply_threshold(averageHeatMap, 2)
    if showheatmap:
        plt.imshow(heatmap)
        plt.show()
    # Group the remaining hot pixels into connected regions, one box per region
    labels = label(averageHeatMap)
    holder.labels = labels
    holder.iteration = holder.iteration + 1
    window_img = draw_labeled_bboxes(image_copy, labels)
    return window_img, averageHeatMap

class ImageHolder:
    """State carried between video frames."""
    def __init__(self):
        self.previousHeat = []  # heatmaps of the most recent frames
        self.labels = []        # labels from the previous frame
        self.iteration = 0      # number of frames processed so far
        self.averageCount = 10  # number of frames to average over

imageHolder = ImageHolder()

def ProcessImage(image):
    # Called once per video frame; the global imageHolder keeps the frame history
    img, _ = detect_vehicles(image=image, holder=imageHolder)
    return img
```

```python
non_vehicle = "non-vehicles/Extras/extra1.png"
vehicle = "vehicles/GTI_Far/image0000.png"

def display_hog_img(file):
    image = mpimg.imread(file)
    feature_image = cv2.cvtColor(image, cv2.COLOR_RGB2YCrCb)
    # Show the YCrCb image, then each of its three channels
    plt.imshow(feature_image)
    plt.show()
    for channel in range(feature_image.shape[2]):
        plt.imshow(feature_image[:, :, channel])
        plt.show()
    # Show the HOG visualization of each channel
    for channel in range(feature_image.shape[2]):
        features, hog_image = get_hog_features(img=feature_image[:, :, channel],
                                               orient=orient,
                                               cell_per_block=cell_per_block,
                                               pix_per_cell=pix_per_cell, vis=True)
        plt.imshow(hog_image)
        plt.show()

display_hog_img(non_vehicle)
display_hog_img(vehicle)
```

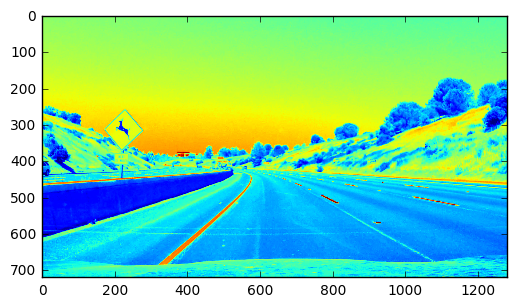

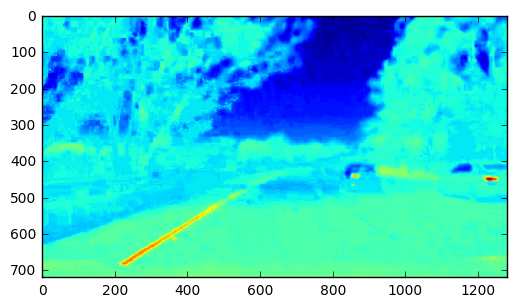

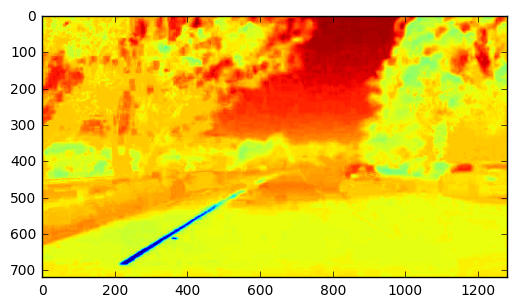

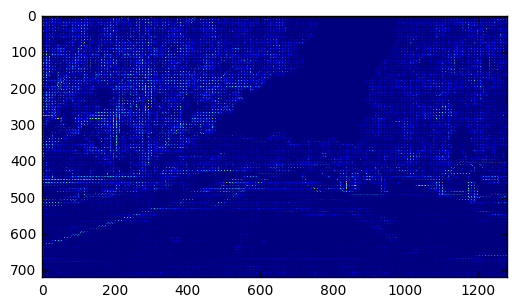

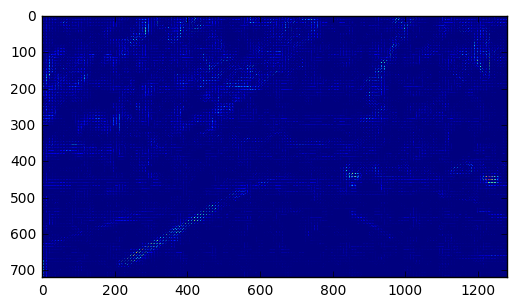

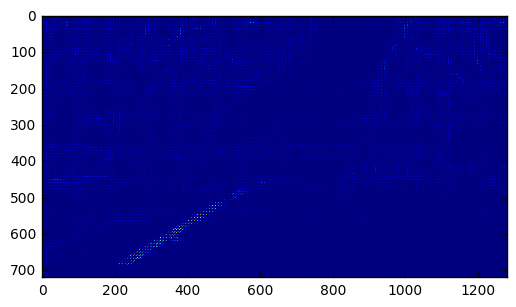

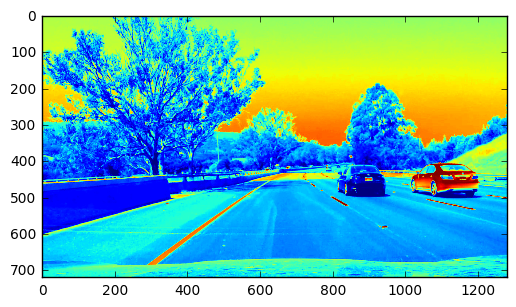

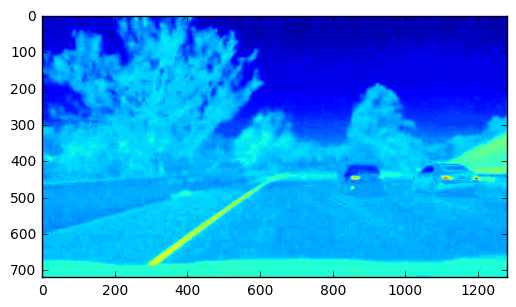

```python
images = glob.glob("test_images/*")
for file in images:
    image = mpimg.imread(file)
    feature_image = cv2.cvtColor(image, cv2.COLOR_RGB2YCrCb)
    # Show the YCrCb image, then each of its three channels
    plt.imshow(feature_image)
    plt.show()
    for channel in range(feature_image.shape[2]):
        plt.imshow(feature_image[:, :, channel])
        plt.show()
    # Show the HOG visualization of each channel
    for channel in range(feature_image.shape[2]):
        features, hog_image = get_hog_features(img=feature_image[:, :, channel],
                                               orient=orient,
                                               cell_per_block=cell_per_block,
                                               pix_per_cell=pix_per_cell, vis=True)
        plt.imshow(hog_image)
        plt.show()
    # Run the full detection pipeline and show the boxes and the thresholded heatmap
    window_img, heatmap = detect_vehicles(image)
    plt.imshow(window_img)
    plt.show()
    plt.imshow(heatmap)
    plt.show()
```

```python
import imageio
from imageio.plugins import ffmpeg
from moviepy.editor import VideoFileClip  # moviepy 1.x API
from IPython.display import HTML

# Reset the frame history before processing the video
imageHolder = ImageHolder()
white_output = 'output.mp4'
clip1 = VideoFileClip("project_video.mp4")
white_clip = clip1.fl_image(ProcessImage)  # NOTE: this function expects color images!!
%time white_clip.write_videofile(white_output, audio=False)
```

```text
[MoviePy] >>>> Building video output.mp4
[MoviePy] Writing video output.mp4
100%|█████████▉| 1260/1261 [2:40:51<00:07, 7.94s/it]
[MoviePy] Done.
[MoviePy] >>>> Video ready: output.mp4
CPU times: user 2h 39min 57s, sys: 46.3 s, total: 2h 40min 43s
Wall time: 2h 40min 51s
```